Faraday Future’s FF91 car is due out by year’s end. Thanks to V2X, robocars are cellphones on wheels. A free-wheeling NI Week keynote panel talks about self-driving car issues.

A physicist, a semiconductor salesman and a lawyer all met on a stage at NI Week on Wednesday. It sounds like the beginning of a bad joke involving a bar. Instead, it was National Instruments’ keynote panel on the self-driving car. Strangely enough, the three disparate speakers had a lot in common. They’re all basically highly educated engineers, for one thing.

Two of them also got into engineering because they wanted to DJ and tinkered with equipment related to music and audio.

The panelists were Richard Aspinall, manager of powertrain manufacturing at Faraday Future, Bryant Walker Smith, assistant professor at the School of Law, University of South Carolina and Kamal Khouri, vice president of Advanced Driver Assistance Systems, NXP. National Instruments’ Eric Starkloff moderated the panel.

One thing the panelists agreed upon right away: the recent Uber accident in Arizona was a failure of the test environment, not of production-ready software. In the accident, a pedestrian, albeit an oblivious one, was killed by a self-driving car that didn’t stop when it could have. It was a test failure, said the panelists.

“Crashes are inevitable, failures are inevitable, but that doesn’t mean that a specific failure or crash is inevitable or unavoidable,” says Smith. “While we can critique the hardware and software performance in case of the Uber fatality, ultimately because that was a test, that was a failure of the testing program because the software and hardware are not expected to be production ready at that point.”

“I agree with Bryant, it was a test failure,” said Khouri. “It should not hinder our ability to move forward.”

Makes one wonder why Uber wasn’t testing the production software on public roads.

Another agreement: There are no driverless cars on the road now. That is the biggest public misperception about self-driving cars today. At this stage, a person is always in the car and in control of the vehicle, although that didn’t seem to help in the Uber crash, where video showed a very distracted test driver. Today’s technology is either driver assistance or a test vehicle with a test driver supervising. “There’s a big difference between getting on a plane and hearing the pilot say ‘Hi folks, I will be using autopilot today’ and ‘Hi you will be using autopilot today. I am getting off,’” said Smith.

Cellphone on wheels

The panelists naturally agreed on the importance of safety, and not just in how robocars drive but how secure they are from malicious attacks. Vehicle hacks are a problem and on the rise. “We have had 25 notable and documented hacks since 2015, the largest of which actually affected 1.4 million vehicles costing about a billion dollars,” said Khouri in his opening presentation.

The immense complexity of the code inside the vehicle, coupled with its connection to outside infrastructure (V2X) and to other cars, gives malicious hackers many entry points for collecting the data that our cars produce. “The sheer system complexity, the number of lines of the code, makes it vulnerable to hacking,” said Khouri.

“With V2X every car becomes like a cell phone,” said Khouri. “Our duty: protect privacy, prevent unauthorized access.” He reminded people that the vehicles can be used as weapons, as seen in recent terrorist attacks.

(Left to right) Moderator Eric Starkloff (National Instruments) questions panelists Richard Aspinall (manager of powertrain manufacturing at Faraday Future), Kamal Khouri (vice president of Advanced Driver Assistance Systems, NXP) and Bryant Walker Smith (assistant professor at the School of Law, University of South Carolina) during the NI Week keynote panel on autonomous vehicles. (Image courtesy of NI’s keynote live stream.)

Vehicle-to-everything (V2X) communication, which includes links to roadside infrastructure and to other vehicles, is where the wireless industry most intersects with autonomous vehicles. It has issues beyond the technical challenge of getting the network to perform fast, with low-enough latency. It’s almost as if the network will become a real-time operating system, such as you would see in any safety-critical embedded system. Regardless, the self-driving car and its V2X infrastructure require an immense amount of computing, says Khouri.

“We think of self-driving cars as three components: sensing, thinking, and acting,” Khouri said. Sensing develops a model of the world around the car (obstacles, moving or stationary, around the vehicle). Next, whatever is driving the car has to crunch that data, think about it and make a decision, then act upon it.
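To make the sensing, thinking and acting split concrete, here is a minimal Python sketch of one pass through such a loop. It is only an illustration of the three stages Khouri describes, not NXP’s or anyone else’s implementation: the function names, the time-to-collision rule and the 2-second threshold are all hypothetical choices made for this example.

```python
from dataclasses import dataclass


@dataclass
class Obstacle:
    distance_m: float         # gap to the obstacle, in meters
    closing_speed_mps: float  # positive when the gap is shrinking


def sense(raw_detections):
    """Sensing: turn raw (distance, closing speed) pairs into a world model."""
    return [Obstacle(d, v) for d, v in raw_detections]


def think(world, min_time_to_collision_s=2.0):
    """Thinking: decide what to do (hypothetical time-to-collision rule)."""
    for obstacle in world:
        if obstacle.closing_speed_mps > 0:
            ttc = obstacle.distance_m / obstacle.closing_speed_mps
            if ttc < min_time_to_collision_s:
                return "brake"
    return "cruise"


def act(decision):
    """Acting: map the decision to (made-up) actuator commands."""
    commands = {
        "brake": {"throttle": 0.0, "brake": 1.0},
        "cruise": {"throttle": 0.3, "brake": 0.0},
    }
    return commands[decision]


def drive_step(raw_detections):
    """One pass through the loop: sense, then think, then act."""
    return act(think(sense(raw_detections)))
```

Calling `drive_step([(10.0, 8.0)])` models an obstacle 10 meters away closing at 8 meters per second, which is under the assumed threshold and so yields the braking command. A real vehicle would fuse several sensors and weigh many more factors at each stage.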

Decisions have to be based on safety, along three dimensions: functional, behavioral and environmental. Is the car behaving the way a driver should? Is it driving safely for the current conditions?

“Safety, security, reliability is the promise we have to give our customers,” said Khouri.

Don’t ask if the car is safe, advised Smith, the engineer-turned-lawyer, because cars will always be as safe as the last accident. “Trustworthiness is everything,” he said. His advice to the companies developing these technologies: be trustworthy, have credibility. Share your safety philosophies and explain why you think the product is reasonably safe. Keep promises. Share successes and failures. In the event of failures, make things right.

As consumers and regulators, we need to “ask if the companies developing the technologies are worthy of our trust.” Smith co-authored the SAE levels of automation used in automated driving.

The emergence of compliance and testing standards needs to happen, agreed the panelists. “We are starting to see the emergence of standards,” said Khouri, “even on the API level, how to do sensor fusion and how to test that.” Standards processes also provide an important industry forum for sharing and learning, necessary for developing safety standards.

“I can say as manufacturers point of view, we are desperate to have those standards,” said Aspinall, “that guidance really helps us to focus in on what we are trying to do to sell that car and be confident as best as we can.”

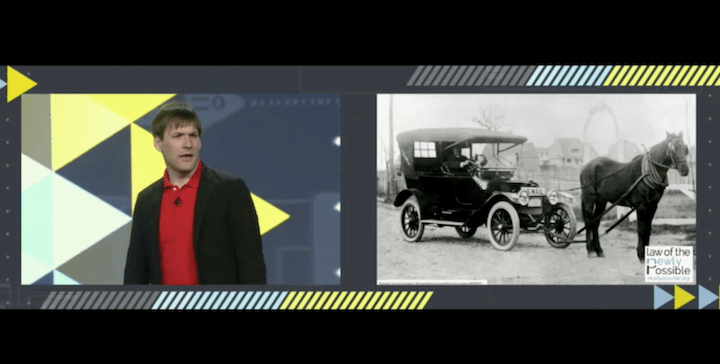

Bryant Walker Smith (above) reviews the history of early automotive startups and how regulations affected drivers, during his opening presentation at a 2018 NI Week keynote on autonomous driving. (Courtesy of NI Week’s streaming video.)

Don’t get complacent, Smith warns. “It takes a little longer for everyone to solidify around one standard for safety,” but we need to be aware there may be better standards out there and the industry needs to find and adopt them, quickly.

Smith warned the future is coming but it won’t be what we think it will be and it will take longer to get here. Engineers both solve and cause problems: “we take one set of problems and replace that with a new set of problems and we just really, really hope that our new set in aggregate is smaller than our old set.” He said this will be true with automated driving as well.

As we develop the autonomous vehicle, we need to look at what laws make sense. “Do we look for permission or do we ask for forgiveness?” says Smith. We need space for new regulatory approaches. “One size will not fit all.” All of our products in the future will be more like services than products, which is a regulatory challenge, says Smith. “The key is to create space for innovation not just in automated driving technologies themselves but in safety assurance approaches.”

A peek at Faraday Future

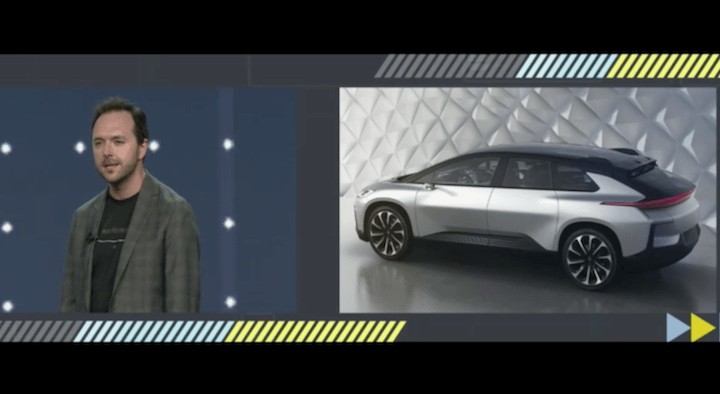

Although it is not a self-driving car, Faraday Future’s electric vehicle has some buzz around it. Aspinall, the physicist-turned-engineer, rattled off numbers on the FF91, an SUV with a top speed of 150 mph that goes from 0 to 60 in 2.39 seconds, delivers more than 4,000 lb-ft of torque within 50 milliseconds, has 1,000 horsepower and a battery range of 380 miles. “They are coming by the end of the year,” Aspinall said. “We are in the final stage of production and verification tests.”

Richard Aspinall, manager of powertrain manufacturing at Faraday Future, talks about Faraday’s FF91 (pictured), due out at the end of the year. He was a panelist at NI Week’s keynote on the self-driving car on Wednesday the 23rd. (Courtesy of National Instruments)

Aspinall manages the manufacturing validation and test of the powertrain system, including batteries and electric motors.

Faraday Future is an American startup with backing from funds and individuals in Hong Kong and China. Its plans to open a battery plant in Nevada got off to a rocky start amid questions about funding, but the funding returned in late 2017. And, as witnessed by this keynote, Faraday has a car in test now. “We have produced over 100 cars just verifying it,” said Aspinall.

Aspinall describes working for startup Faraday Future as fun. “I have a lot of fun working at a startup.” He talks about the flow of ideas and contagious enthusiasm. He also talks about the vibrant, competitive scene of a startup. “There’s a lot of borrowing, stealing of ideas and people. There are lawsuits all over the place.”

Radar vs. lidar? “The jury is out,” said Khouri. “Each sensing modality has its weakness.” Cameras give a human view of the world but are bad at depth perception and at punching through rain or fog. Radar can see through fog, estimate distance and see around corners, but it does not have the resolution of a lidar or a camera. “Today all three of these modalities need to be used and whether it is primary or secondary, all the OEMs have their secret sauce,” said Khouri.

Will autonomous ride sharing be the first use case to reach the market? Although “the future won’t be one thing but lots of things,” said Smith, it is likely that managed fleet deployments in very specific conditions will be the first step. “Slow speeds, simple environments and semi-supervised operations: when we get two of the three is when we will see these fleet deployments,” said Smith.

The automotive market in general “is ripe for economic disruption,” said Faraday’s Aspinall. “Faraday is looking at subscription models,” he said. Because cars are expensive to maintain, he sees more subscriptions or leasing of cars in the future. At the fleet level, money can be saved through predictive maintenance, made possible by connecting vehicles to the internet.

Then the fun question: Who is liable when autonomous cars crash? “If two cars crash today, that question arises,” Smith said. It always depends on specific laws applied to specific facts. “It is something we muddle through today and will muddle through in the future.”

Smith added a word of caution: “These technologies will create so much new power over the public and even over regulators: with that power comes great responsibility.”

The full panel video is available here.

The post Uber crash a failure of test environment, says NI Week panel appeared first on RCR Wireless News.